Memory: Commodity or Scarcity?

A Great Misunderstanding of the Decade

Disclosure: I first drafted this ~2 months ago but only got around to posting it after a huge run-up in memory stocks. Sigh. Although my machine economy piece did allude to it!

I’ve spent the past few months thinking about memory and also investing in it. Computer memory is something we’ve all taken for granted for many years, but we will see its importance in the coming year.

To give you context on how important this is: all consumer electronics and computers will go up in price in 2026 while being worse in specs.

Before we talk about the present-day opportunity, let’s go down history lane and look at what memory is and where it came from.

Origins

Before we get to the present (HBM, or High Bandwidth Memory), we need to understand what memory is and where it started. Memory is basically a computer’s working memory. Generally speaking you want a computer to:

Store lots of information

Access it really quickly

Unfortunately, these two goals pull in opposite directions when it comes to physics. You can’t have something be really quick to access while also being extremely large in its storage capacity. It’s like saying you want a warehouse that is really large but where you can get any parcel in a split second. They just don’t work together.

So computers separated this out into two types of “memory”

Storage memory

Working memory

The second class is what we’re focusing on here, and it started off with RAM (random access memory). You see, when a CPU (central processing unit) does work, it needs to store information temporarily while it does so. RAM solved the problem by acting as a kind of scratch pad, a bit like when you do arithmetic in your head. A temporary memory of sorts.

However as our CPUs became more powerful, we needed to store more information in our memory chips and the industry evolved to what’s known as DRAM (dynamic random access memory). By being able to pack more transistors and capacitors, we could grow the use cases of computing as the working memory available to computers expanded massively. This gave birth to:

Larger programs

Richer operating systems

Virtual memory

Multiprogramming

Databases

Eventually GUIs, browsers, and modern software

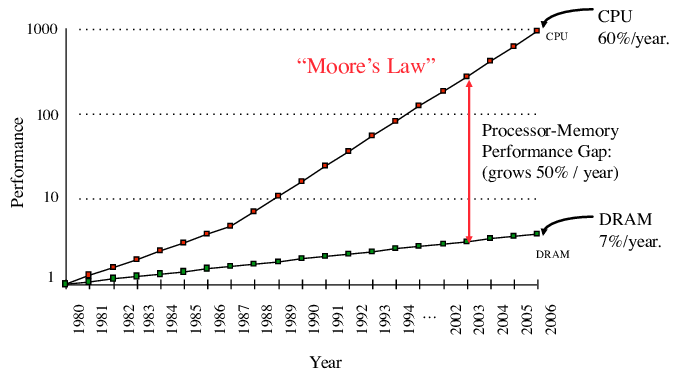

However, our needs didn’t slow down. They never do and we started to see the following divergence:

Now this wouldn’t have been a problem if we had stayed in the paradigm of computing where CPUs dominated. But once we moved beyond it, the bottleneck turned out not to be memory size. It was memory bandwidth!

The Rise of High Performance Computing

When you write a general purpose computing application, you can just give it to your computer and it will figure out how to allocate CPU and RAM. However, CPUs aren’t enough to do very high performance computing. For that you need a new beast, a GPU (Graphics Processing Unit). GPUs differ from CPUs in that they’re designed to do very simple math operations but at enormous scale. A CPU is designed to do more complex tasks but with fewer parallel operations (maybe 128 parallel threads?), versus a GPU that runs tens of thousands of threads. In this scenario, the bottleneck isn’t memory capacity but rather bandwidth, because how much compute you can do depends on how quickly you can store and retrieve information around each of those tiny math operations.
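One way to put rough numbers on this bottleneck is the roofline model, which says a chip can never run faster than the lower of its peak compute and its memory bandwidth multiplied by the amount of math done per byte moved. The sketch below is only an illustration: the 1000 TFLOP/s peak and the 2 FLOPs per byte are made-up assumptions, not the specs of any real GPU.

```python
def attainable_tflops(peak_tflops: float, bandwidth_tb_s: float, flops_per_byte: float) -> float:
    """Roofline model: throughput is capped by compute or by memory traffic, whichever is lower."""
    # TB/s * FLOPs/byte = TFLOP/s that the memory system can actually feed
    memory_bound_tflops = bandwidth_tb_s * flops_per_byte
    return min(peak_tflops, memory_bound_tflops)


# Hypothetical accelerator, for illustration only
PEAK_TFLOPS = 1000.0
FLOPS_PER_BYTE = 2.0  # assumed: a workload doing very little math per byte fetched

for bandwidth_tb_s in (0.1, 1.0, 3.0):
    tflops = attainable_tflops(PEAK_TFLOPS, bandwidth_tb_s, FLOPS_PER_BYTE)
    share = tflops / PEAK_TFLOPS
    print(f"{bandwidth_tb_s:>4} TB/s -> {tflops:6.1f} TFLOP/s usable ({share:.2%} of peak)")
```

Under these assumptions the chip is starved at every bandwidth level, and the only way to use more of the compute you paid for is to feed it faster.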

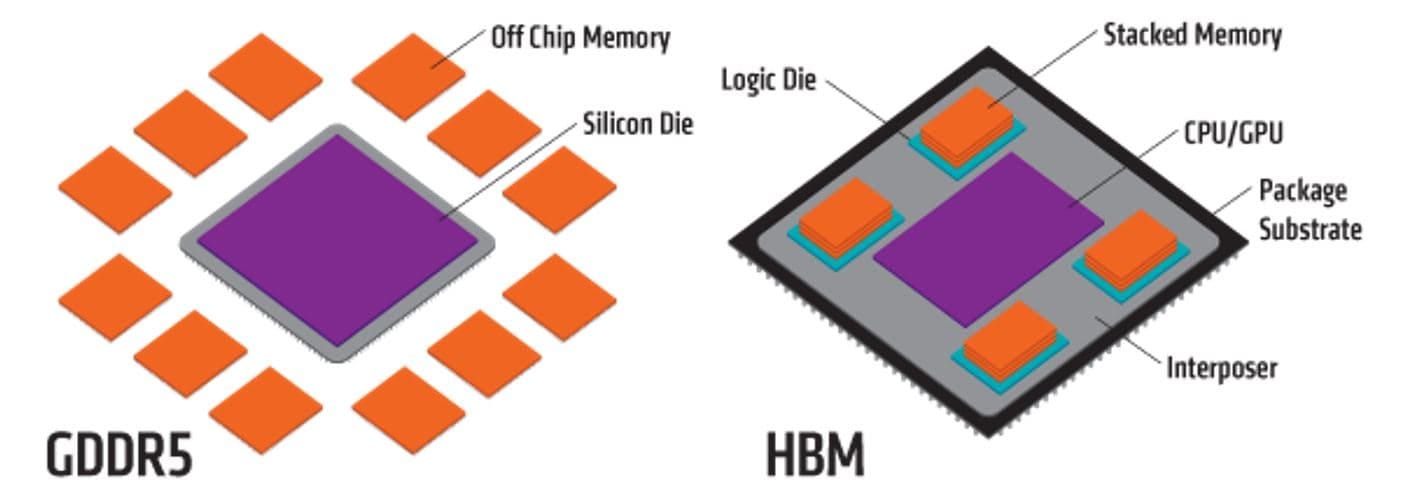

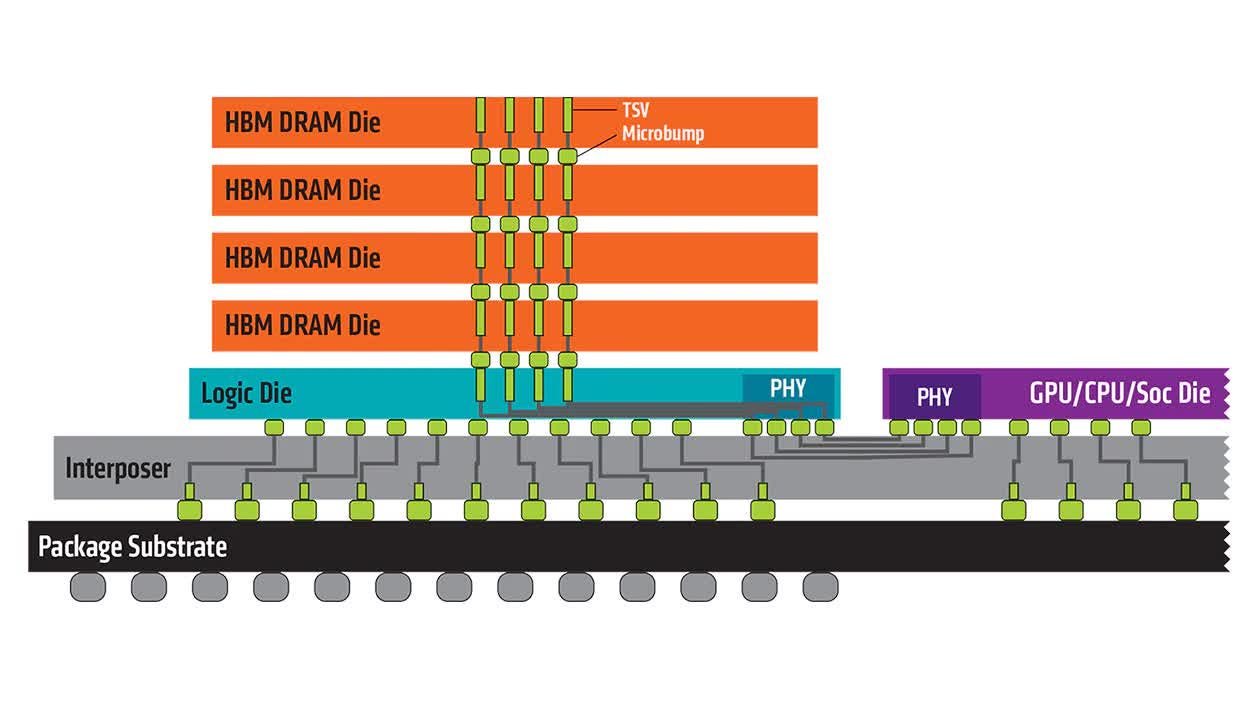

So how do we actually do this? Well, researchers in South Korea came up with what is known as High Bandwidth Memory (HBM). The difference is that rather than spreading memory chips out around the GPU, you stack the memory dies vertically and place the stack right next to the GPU on the same package. That shortens the physical distance the data has to travel, because going up through a stack is a much shorter trip than going across a board.

The image below hopefully illustrates this point.

To better showcase what HBM looks like, here’s an alternative viewpoint:

Now that means that the memory itself is much more integrated with the whole GPU stack and requires more advanced packaging. You can’t just rip out some HBM from a machine and stick it in another, it is very closely tied to the GPU itself. Why go through the trouble? Well for context:

Regular DDR4 RAM can maybe push around 100GB/s

High end HBM can push at least 1TB/s, sometimes even 3+ TB/s!

That 10x in bandwidth means your GPUs can move on to processing the next thing rather than sitting idle waiting on memory. You get more juice out of your expensive GPU chips.
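To make the gap concrete, here is a back-of-the-envelope sketch of how long it takes just to read a model’s weights out of memory once at each of the bandwidth figures above. The 140GB model size is a hypothetical (roughly 70 billion parameters at 2 bytes each), and the calculation ignores caching, compute time and everything else a real system does.

```python
def seconds_per_pass(data_gb: float, bandwidth_gb_s: float) -> float:
    """Time to stream `data_gb` gigabytes once at a sustained `bandwidth_gb_s`."""
    return data_gb / bandwidth_gb_s


# Hypothetical model: 70 billion parameters * 2 bytes each = 140 GB of weights
WEIGHTS_GB = 70e9 * 2 / 1e9

# Bandwidth figures taken from the rough numbers quoted above
memory_types = {
    "DDR4 (~100 GB/s)": 100.0,
    "HBM (~1 TB/s)": 1000.0,
    "HBM (~3 TB/s)": 3000.0,
}

for name, bandwidth_gb_s in memory_types.items():
    t = seconds_per_pass(WEIGHTS_GB, bandwidth_gb_s)
    print(f"{name:<18} {t * 1000:8.1f} ms per full read of the weights")
```

If every generated token needs one full read of the weights, that read time is a floor on how fast the model can respond, no matter how fast the GPU’s math units are.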

This means that they’re not commodities like the typical DRAM sticks I showed at the start of the article. These are much more specialised pieces of hardware. Even the DRAM sticks I showed are not easy to make given the requirements of the latest generation of DDR4/5 RAM!

The Opportunity

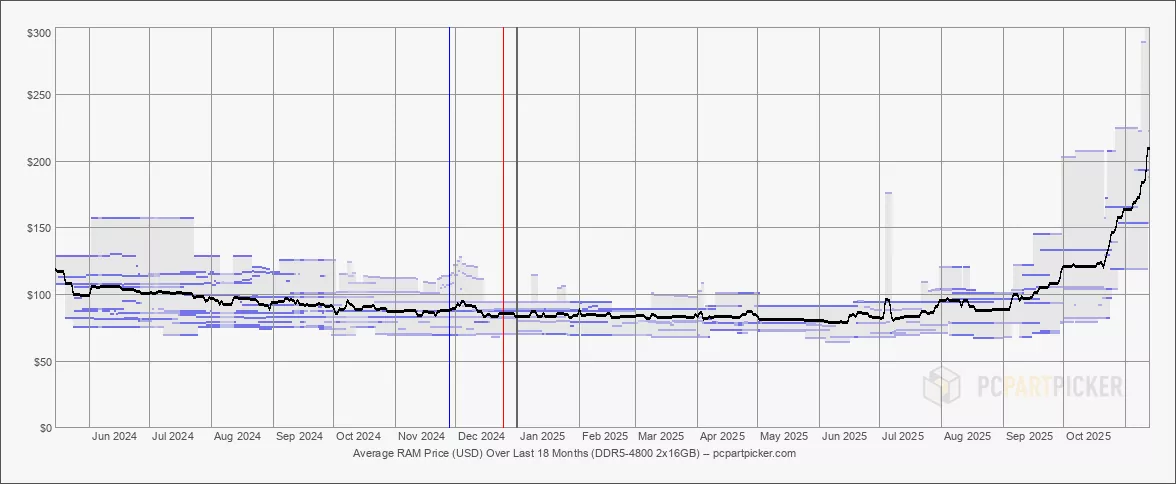

In September of 2025, something very interesting happened… the price of DDR5 RAM started increasing rapidly!

As you can see in the chart above, around September 2025 DDR5 RAM went up from $100 to $200 a stick. What happened, you may ask? Well, the AI thing…

But can’t we just make more of it? Not exactly.

There are only three companies in the world that are good at making this stuff:

SK Hynix (market leader)

Samsung (strong secondary contender)

Micron (US based)

Outside of these three companies that have been making RAM for decades, there are no other options. There is no competition.

The cost to compete is tens of billions of dollars and at least a decade of experience. This is not easy stuff by ANY means. China’s state-backed entity is still behind in this department too, and even if they did succeed, we wouldn’t purchase it as it would be a security risk.

But okay, wouldn’t the existing players just make more if there is so much demand? This is where we need to put on our economics hat and game theory hat.

First of all, the companies that I listed above are public (and yes, I have invested in all of them) so that means that they have obligations to report their earnings, spend, future plans every quarter.

Second of all, these semiconductor companies trade at price/earnings ratios of 10-15. Why is that? Well, they’ve been burned in the past by building capacity for a surge in demand, only for that demand to evaporate. This is exacerbated by the fact that a foundry has ongoing running costs that erode cash and require debt to maintain.

Third of all, the above factors mean that the market views memory as a cyclical commodity, punishing it with a low P/E and high skepticism.

That last sentence is where the opportunity lies because it is empirically wrong. First, HBM is not a commodity as it requires extreme specialisation to manufacture. Second, on demand: HBM is sold out till 2026, and 2028 is looking like the earliest that higher manufacturing capacity is going to come online. However, by the time more capacity comes online the models are going to be even larger and more resource-hungry, requiring larger context windows. I’ve written about larger context windows and how they scale quadratically rather than linearly in this article:

All of this is to say that memory demand is not going away. The challenge you’re going to face is actually buying these companies after they have run up 200-500% in value in the past year. You could bet on a major retrace giving you your chance, but the risk you run is watching them run up even higher, as there are no real reasons these companies will experience material changes in their underlying demand and subsequent cashflows.

My favourites among the contenders, ranked:

SK Hynix: dominant market leader in HBM, controlling 60%+ of market share. Forward P/E ratio of ~7-8 and expected to list on US exchanges via an ADR, which will expand the addressable market of investors.

Micron: trades at a forward P/E ratio of ~10 and US based. Makes HBM, SSDs and more. Has only run up 250% in the past year. Still has 2-5x upside imo. Even if the market doesn’t give it a higher P/E ratio, the earnings will expand the valuation.

Sandisk: probably the hardest of them all to own as it’s run up 500% and the math around NAND/Flash memory defensibility is questionable. Still a massive supply shortage. If High Bandwidth Flash becomes real then Sandisk is a steal. They’re collaborating with Hynix on this so TBD.

Other mentions: Seagate and Western Digital. While not being memory, they’re storage which is also in a huge shortage as the amount of data generated explodes.
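The point in the Micron entry, that earnings can grow the valuation even if the multiple never moves, is just arithmetic: price is earnings per share multiplied by the P/E. Here is a tiny sketch of that relationship. The earnings figures and multiples are placeholders I made up to show the mechanics, not forecasts for any of the companies above.

```python
def implied_price(eps: float, pe_multiple: float) -> float:
    """Share price implied by earnings per share and a price/earnings multiple."""
    return eps * pe_multiple


def upside(eps_now: float, eps_later: float, pe_now: float, pe_later: float) -> float:
    """How many times the implied price grows between the two scenarios."""
    return implied_price(eps_later, pe_later) / implied_price(eps_now, pe_now)


# Placeholder numbers, purely illustrative
EPS_NOW, EPS_LATER = 10.0, 20.0  # assumed: earnings per share double

# Case 1: the market keeps treating it as a cyclical commodity (multiple stays at 10)
print(f"Flat multiple:     {upside(EPS_NOW, EPS_LATER, 10.0, 10.0):.1f}x")

# Case 2: the market also re-rates it from 10x to 15x earnings
print(f"Re-rated multiple: {upside(EPS_NOW, EPS_LATER, 10.0, 15.0):.1f}x")
```

With these made-up inputs the flat-multiple case gives 2.0x and the re-rated case gives 3.0x, which is the shape of the argument: earnings growth does the work on its own, and any re-rating is a bonus on top.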

Disclaimer: I own all of the above and intend to hold them for at least another 1-2 years as the memory shortage persists globally.

Broader Notes

More broadly, I see a very interesting opportunity in the market for a techno investor who understands the underlying technology first and the financials second. There are other sectors of the AI build-out where I see similarly mispriced opportunities that I intend to invest my capital into. Every 3 months I am impressed all over again by the increased capabilities of the models, and until I see a slowdown in capabilities and modalities, I am very long on this thesis.