Starkware Airdrop Analysis

A study on criteria, eligibility and claim mechanics

Following on from my last article around the multiple airdrops by Optimism, I wanted to take a look at Starkware’s airdrop since I managed to extract the data at the same time. The key difference between Starkware and Optimism’s airdrop I wanted to study was how the claim mechanism would impact that criteria. This data is approximately a month stale now but won’t be too far off from the actual numbers given the airdrop was done multiple months ago.

Claim vs Drop Model

The key difference between the two approaches is that Optimism said “we will personally deliver the airdrop to your wallet” whereas Starkware said “come to us to claim your airdrop”. The case for the former is that it’s easier for users and saves on gas. My personal philosophy is that if you’re doing this on a low-cost chain (that’s what your valuation is predicated on, right?!) then cost shouldn’t be an issue, and the least someone can do to claim free money is click a button.

That being said, let’s take a look at the Starkware drop. Unfortunately the data was extremely challenging to get because:

Starkware didn’t publish data breaking down how people claimed after the airdrop

Starkware doesn’t use standard EVM-format addresses (they’re much longer than 20 bytes), which meant I had to hack around to get the data that is available on-chain.

Anyways, here’s the official chart around how the airdrop was allocated:

Data Gathering

To get the data I needed, I basically used:

0x06793d9e6ed7182978454c79270e5b14d2655204ba6565ce9b0aa8a3c3121025 as my airdrop contract to get all the claim events from

0x00ebc61c7ccf056f04886aac8fd9c87eb4a03d7fdc8a162d7015bec3144c3733 as my starting block hash

0x04718f5a0fc34cc1af16a1cdee98ffb20c31f5cd61d6ab07201858f4287c938d as the contract to get STRK balances from

Some fun snippets of me having to get balances through many for loops and byte hacking to get the data I wanted.
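To give a flavour of the byte handling involved, here is a minimal sketch, not the script actually used for this analysis. It assumes addresses arrive as hex strings that may have lost their leading zeros, that token amounts come back as a Starknet u256 split into two 128-bit limbs (low, then high), and that STRK uses 18 decimals. The function names are hypothetical.

```python
FELT_HEX_WIDTH = 64  # 32 bytes of hex, versus 40 hex characters for a 20-byte EVM address


def normalize_address(addr: str) -> str:
    """Left-pad a hex address to a fixed 0x + 64 hex-character form."""
    value = int(addr, 16)
    return "0x" + format(value, "x").zfill(FELT_HEX_WIDTH)


def decode_u256(low_hex: str, high_hex: str) -> int:
    """Combine the low and high 128-bit limbs of a u256 into one integer."""
    return int(low_hex, 16) + (int(high_hex, 16) << 128)


def to_strk(raw_amount: int, decimals: int = 18) -> float:
    """Convert a raw token amount to whole STRK (assumes 18 decimals)."""
    return raw_amount / 10**decimals


# The STRK contract address with its leading zero stripped normalizes back to full width.
short = "0x4718f5a0fc34cc1af16a1cdee98ffb20c31f5cd61d6ab07201858f4287c938d"
padded = normalize_address(short)

# 111.1 STRK expressed in raw units fits entirely in the low limb.
raw = decode_u256(hex(111_100_000_000_000_000_000), "0x0")
balance = to_strk(raw)
```

Normalizing every address to one width before comparing matters because the same address can show up with or without leading zeros depending on where the data came from.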

Anyways, at the time of extraction, I found 519,282 events on the claim contract. There were a total of 1,304,079 eligible addresses, meaning only 39.8% claimed the airdrop. The remaining users were basically used as marketing collateral, which I think is a good outcome! Some may say this was bad, but if you can get the message out to the broadest base of people while still not giving away everything, you’ve kind of found the sweet spot. The wide criteria made most people feel included, which generates goodwill amongst the community.

Analysis Time

I took all the addresses from the claim events and ran a script to get each one’s balance at the time the script ran. I could then segment those balances into buckets. I wish I could have understood these users further, but the limited data made this a much more challenging exercise.
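The segmentation step can be sketched roughly as follows. This is an illustration under assumptions rather than the original script: only the 100 STRK threshold comes from this article, while the other bucket edges, the function names and the sample balances are made up for the example.

```python
from collections import Counter

# Bucket edges in STRK as (lower bound inclusive, upper bound exclusive, label).
# Only the 100 STRK threshold is from the article; the rest are hypothetical.
BUCKETS = [
    (0, 100, "<100"),
    (100, 1_000, "100-1k"),
    (1_000, 10_000, "1k-10k"),
    (10_000, float("inf"), "10k+"),
]


def bucket_for(balance: float) -> str:
    """Return the label of the bucket a balance falls into."""
    for lower, upper, label in BUCKETS:
        if lower <= balance < upper:
            return label
    raise ValueError(f"negative balance: {balance}")


def segment(balances: dict) -> dict:
    """Map each bucket label to the share of addresses that fall in it."""
    counts = Counter(bucket_for(b) for b in balances.values())
    total = len(balances)
    if total == 0:
        return {label: 0.0 for _, _, label in BUCKETS}
    return {label: counts[label] / total for _, _, label in BUCKETS}


# Made-up balances keyed by address, purely to show the shape of the output.
sample = {"0xa": 0.0, "0xb": 50.0, "0xc": 111.1, "0xd": 1800.0}
shares = segment(sample)
```

With real data, `balances` would hold one entry per claiming address, and the share in the lowest bucket is the portion treated as having sold or moved the airdrop.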

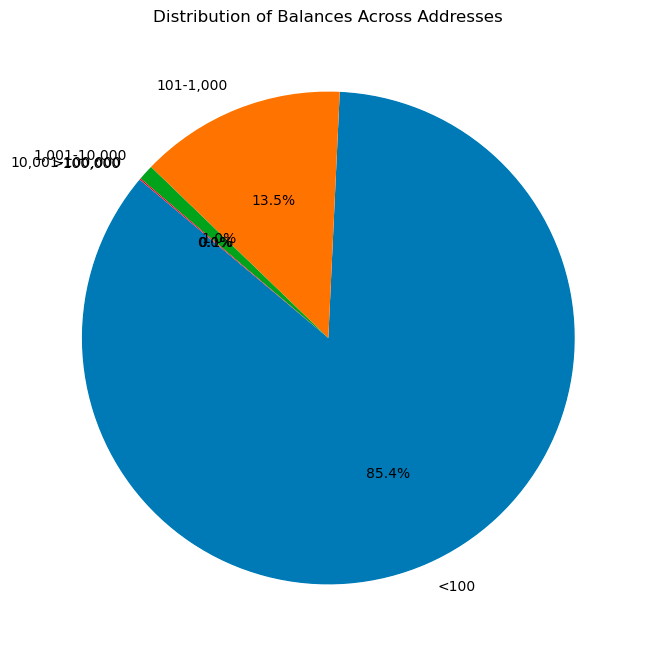

Without further ado, here are the results! I used less than 100 STRK as the threshold because the smallest airdrop given was 111.1 STRK. Here’s a breakdown of the amounts:

StarkEx Users: 111.1 STRK each

Open Source Developers: 111.1 STRK each

Starknet Users: Range from 500 to 10,000 STRK, with varying multipliers

Starknet Community Members: Range from 10,000 to 180,000 STRK

Starknet Developers: 10,000 STRK each

Ethereum Staking Pools: 360 STRK per validator

Solo Stakers: 1,800 STRK per validator, up to 3,200 STRK for those with higher risk profiles

Ethereum Developers: 1,800 STRK each

Protocol Guild Members: 10,000 STRK each

EIP Authors: 2,000 STRK each

To further defend my choice of the less than 100 bucket, the total amount held by this group is small. The script output was: Total recipient amount for '<100' bucket: 1,896,317.6861687868, which is less than 3%.

Overall, not a very good airdrop! A 13.5% retention rate is close to the industry norm (which is bad). However, given that a normal GitHub user like me was given 1,800 STRK, my deeper take on this is that the airdrop is worse than you’d hope! Only 1.1% of users who were given anything substantial retained it! I’m a bit on the fence, as the interpretation of this airdrop can go both ways. However, let’s look at other data points to help determine whether this airdrop was a success or not.

An easy proxy is token price. Here’s the 3-month chart of the STRK token. Down 50%, but there was also a big market sell-off. Not great, but at least it’s not down 90%?

Let’s look from another angle: TVL. At least our friends over at DeFi Llama can help with this exercise.

TVL rose to around $320m and then dropped to around $210m, which is pretty good retention. However, we don’t know how much Starkware gave out to get these numbers. Luckily I have the number: 67,078,250.942674 STRK.

If we assume an average token price of $1.50

We can re-express this as Starkware spending $100,617,376 to acquire ~$300m in TVL

Or put another way, about $1 in STRK tokens to acquire $3 in TVL
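The arithmetic behind those figures is easy to check. The sketch below uses only the numbers quoted above (tokens claimed, the assumed $1.50 average price, and roughly $300m of TVL); the variable names are just for illustration.

```python
tokens_distributed = 67_078_250.942674  # STRK claimed, from the extraction above
assumed_price_usd = 1.50                # assumed average token price
tvl_usd = 300_000_000                   # approximate TVL acquired

# Dollar value of the tokens handed out at the assumed price.
spend_usd = tokens_distributed * assumed_price_usd

# How many dollars of TVL each dollar of STRK brought in, and the inverse.
tvl_per_dollar_spent = tvl_usd / spend_usd
spend_per_dollar_tvl = spend_usd / tvl_usd

print(f"Spend: ${spend_usd:,.0f}")
print(f"TVL per $1 of STRK: ${tvl_per_dollar_spent:.2f}")
print(f"STRK spend per $1 of TVL: ${spend_per_dollar_tvl:.2f}")
```

The result is sensitive to the assumed price: the dollar spend moves in direct proportion to it, so the ratio should be read as a rough order of magnitude.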

My next question is what are the user counts like so we can understand a CAC model for this equation. I re-drew my chart above with percentages into user counts.

Alright, so let’s give Starknet the benefit of the doubt here and only count the less than 100 bucket as churned. We’ve spent close to $100m to acquire 519,282 users. This translates to ~$200 per user. Now if we re-express this in terms of retained users (above 101 tokens), we get $1,341 per retained wallet.
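As a rough sketch of the CAC arithmetic, using only figures quoted in this article: the helper name is hypothetical, and the retained wallet count is backed out from the quoted $1,341 figure rather than taken from the underlying data.

```python
def cac(spend_usd: float, wallets: float) -> float:
    """Customer acquisition cost: dollars spent per wallet acquired."""
    return spend_usd / wallets


spend_usd = 100_617_376  # airdrop value at the assumed $1.50 per STRK
claimers = 519_282       # claim events found on the claim contract

cac_per_claimer = cac(spend_usd, claimers)

# Implied number of retained wallets, derived from the quoted retained CAC.
# This is an inference from the article's figure, not a measured count.
quoted_retained_cac = 1_341
implied_retained_wallets = spend_usd / quoted_retained_cac

print(f"CAC per claimer: ${cac_per_claimer:,.2f}")
print(f"Implied retained wallets: {implied_retained_wallets:,.0f}")
```

The same helper works for any definition of a retained wallet: swap in the count of addresses above whichever balance threshold you prefer.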

This is lower than what we saw on the Arbitrum airdrop and others where retained CAC is in the high thousands of dollars or even tens of thousands of dollars. While Starkware’s airdrop wasn’t great from a retention perspective, it was decent from a CAC perspective relative to others I’ve seen. My thesis for this is similar to what we saw on the Optimism airdrops:

Distributing tokens based on diversified attribute criteria leads to superior returns

Closing

Starkware was relatively thoughtful in how they gave lots of tokens to lots of different groups and the data shows that clearly. This is a common theme I’m seeing in airdrops that perform well versus those that don’t.

So why don’t more projects choose diverse user attributes to airdrop tokens to users? Well, it comes down to the fact that collecting, analyzing and drawing conclusions from data is hard, especially when you have vast amounts of it. Starkware managed to use relatively simple criteria that still ensured diversity, although there are ways to get even more targeted with the right tools.

I have many thoughts on this that I’ll be writing in upcoming articles but for now, I’ll leave you with this clue to the airdrop puzzle: data is the biggest limitation, although in ways very few can see.