The Airdrop Trilemma: A Data Problem in Disguise.

A deep dive into airdrop capital efficiency, decentralisation & retention.

Recently, Starkware initiated their much-awaited airdrop. Like most airdrops, it resulted in a ton of controversy, which, tragically, doesn't really surprise anyone anymore.

So why does this keep happening over and over again? You might hear a few of these perspectives:

Insiders just want to dump and move on, cashing out billions

The team didn't know any better and didn't have the right counsel

Whales should have been given more priority since they bring TVL

Airdrops are about democratising what it means to be in crypto

Without farmers, there is no usage or stress testing of the protocol

Misaligned airdrop incentives continue to produce strange side effects

None of these views is wrong, but none of them is completely true by itself. Let's unpack a few takes to make sure we have a comprehensive understanding of the problem at hand.

There is a fundamental tension in every airdrop. You're choosing between three factors:

Capital Efficiency

Decentralisation

Retention

Airdrops often do well on one dimension but rarely strike a good balance across two, let alone all three. Retention in particular is the hardest dimension, with anything north of 15% typically unheard of.

Capital efficiency is defined by the criteria you use to decide how many tokens each participant gets. The more capital-efficient your distribution, the closer it gets to liquidity mining (one token per dollar deposited), which benefits whales.

Decentralisation is defined by who gets your tokens and under what criteria. Recent airdrops have adopted arbitrary criteria in order to maximise coverage of who gets said tokens. This is typically a good thing, as it saves you from legal troubles and buys you more clout for making people rich (or paying for their parking fines).

Retention is defined as how many users stick around after the airdrop. In some sense, it's a way to gauge how aligned your users were with your intent: the lower the retention, the less aligned your users were. A 10% retention rate, the industry benchmark, means only 1 in 10 addresses is actually here for the right reasons!

Putting retention aside, let's examine the first two in more detail: capital efficiency and decentralisation.

To understand the first point around capital efficiency, let's introduce a new term called the "sybil coefficient". It measures how much you benefit from splitting one dollar of capital across a certain number of accounts.

Where you lie on this spectrum ultimately determines how wasteful your airdrop will be. A sybil coefficient of 1 technically means you're running a liquidity mining scheme, which will anger lots of users.

However, when you get to something like Celestia, where the sybil coefficient balloons to 143, you get extremely wasteful behaviour and rampant farming.
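The article doesn't give a formula for the sybil coefficient, so here is one possible reading as a minimal sketch. It assumes the coefficient is the ratio of tokens earned by splitting a fixed amount of capital evenly across n addresses to tokens earned by keeping it all in one address. The three allocation rules are hypothetical examples, not any project's actual criteria, and this is not how the Celestia figure was derived.

```python
import math
from typing import Callable

# Hypothetical allocation rules: dollars of capital held by one address -> tokens received.
def liquidity_mining(capital: float) -> float:
    return capital  # one token per dollar deposited

def square_root(capital: float) -> float:
    return math.sqrt(capital)  # concave: diminishing returns on extra capital

def flat_per_address(capital: float) -> float:
    return 100.0 if capital > 0 else 0.0  # every eligible address gets the same amount

def sybil_coefficient(rule: Callable[[float], float], capital: float, accounts: int) -> float:
    """Tokens from splitting `capital` evenly across `accounts` addresses,
    divided by tokens from keeping all of it in a single address."""
    split_total = accounts * rule(capital / accounts)
    return split_total / rule(capital)

if __name__ == "__main__":
    for rule in (liquidity_mining, square_root, flat_per_address):
        print(rule.__name__, sybil_coefficient(rule, capital=10_000, accounts=100))
```

Under these assumptions, liquidity mining gives a coefficient of 1 (splitting gains nothing), the square-root rule gives the square root of the number of accounts, and a flat per-address amount gives the number of accounts itself, which is where farming pays off most.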

Decentralisation

This leads us to our second point, decentralisation: you ultimately want to help "the small guy", a real user who takes the chance to use your product early despite not being rich. If your sybil coefficient gets too close to 1, you'll give close to nothing to the "small guy" and most of it to the "whales".
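To make the whale versus small-guy trade-off concrete, here is a toy calculation. It assumes a made-up population of one whale with $1,000,000 and 1,000 small users with $100 each, sharing one token pool under two hypothetical rules: linear (liquidity mining) and square root.

```python
import math
from typing import Callable, Dict

def pool_shares(deposits: Dict[str, float], rule: Callable[[float], float]) -> Dict[str, float]:
    """Split a token pool across addresses in proportion to rule(deposit)."""
    weights = {addr: rule(amount) for addr, amount in deposits.items()}
    total = sum(weights.values())
    return {addr: w / total for addr, w in weights.items()}

# Made-up population: one whale with $1,000,000 and 1,000 small users with $100 each.
deposits: Dict[str, float] = {"whale": 1_000_000.0}
deposits.update({f"small_{i}": 100.0 for i in range(1_000)})

linear = pool_shares(deposits, lambda c: c)  # liquidity mining: sybil coefficient of 1
concave = pool_shares(deposits, math.sqrt)   # square-root rule: small users weigh more

for name, shares in (("linear", linear), ("square root", concave)):
    small_total = sum(v for k, v in shares.items() if k != "whale")
    print(f"{name}: whale {shares['whale']:.1%}, small users {small_total:.1%}")
```

With these numbers the whale takes about 91% of the pool under the linear rule and about 9% under the square-root rule. The catch is that the square-root rule also makes splitting capital across many wallets more profitable, which is exactly the tension between capital efficiency and decentralisation.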

Now this is where the airdrop debate becomes heated. You have three classes of users that exist here:

"small guys" who are here to make a quick buck and move on (maybe using a few wallets in the process)

"small guys" who are here to stay and like the product you've made

"industrial-farmers-who-act-like-lots-of-small-guys" here to absolutely take most of your incentives and sell them before moving to the next thing

The third is the worst, the first is still kind of acceptable, and the second is optimal. Differentiating between the three is the grand challenge of the airdrop problem.

So how do you solve for this problem? While I don't have a concrete solution, I have a philosophy around how to solve this that I've spent the past few years thinking about and also observing first-hand: project-relative segmentation.

I'll explain what I mean. Zoom out and think about the meta-problem: you have all your users, and you need to divide them into groups based on some sort of value judgement. Value here is specific to the observer, so it will vary from project to project. Trying to apply some "magical airdrop filter" is never going to be sufficient. By exploring the data, you can start to understand what your users truly look like and make data-driven decisions, through segmentation, about the appropriate way to execute your airdrop.

Why does no one do this? That's another article I'll be writing in the future, but the very long TLDR is that it's a hard data problem that requires data expertise, time and money. Not many teams are willing or able to invest that.
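The segmentation idea above can be illustrated with a small sketch, though it is not a concrete solution and not the author's method. Each project supplies its own ordered rules, and every user lands in the first group whose predicate matches. The feature names (`linked_wallets`, `active_days`, `product_actions`) and all thresholds are hypothetical placeholders that a team would replace with signals from its own data.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

@dataclass
class User:
    address: str
    linked_wallets: int   # hypothetical: wallets clustered to the same operator
    active_days: int      # hypothetical: distinct days with activity
    product_actions: int  # hypothetical: project-specific "meaningful" actions

# A project-defined rule: (group label, predicate). Order matters: first match wins.
Rule = Tuple[str, Callable[[User], bool]]

def segment(users: List[User], rules: List[Rule], default: str = "unclassified") -> Dict[str, List[str]]:
    groups: Dict[str, List[str]] = {}
    for user in users:
        label = next((name for name, predicate in rules if predicate(user)), default)
        groups.setdefault(label, []).append(user.address)
    return groups

# Example rules for one hypothetical project; another project would choose different ones.
example_rules: List[Rule] = [
    ("industrial_farmer", lambda u: u.linked_wallets >= 50),
    ("engaged_user", lambda u: u.active_days >= 30 and u.product_actions >= 10),
    ("quick_buck", lambda u: u.active_days < 30),
]

users = [
    User("0xaaa", linked_wallets=120, active_days=5, product_actions=2),
    User("0xbbb", linked_wallets=1, active_days=90, product_actions=40),
    User("0xccc", linked_wallets=3, active_days=4, product_actions=1),
]
groups = segment(users, example_rules)
```

Keeping the rules as data rather than hard-coding them is what makes this "project-relative": the segmentation machinery stays the same while each team plugs in its own value judgement.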

Retention

The last dimension I want to talk about is retention. Before we talk about it, it's best to define what retention means in the first place. I'd sum it up as follows:

retention = (number of people who keep the airdrop) / (number of people the airdrop is given to)
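As a minimal sketch, retention can be computed as the number of recipients who kept the airdrop divided by the number who received it. This assumes "kept" means a non-zero current balance, the proxy used in the analysis that follows; the function name and inputs are illustrative.

```python
from typing import Dict, Iterable

def retention(recipients: Iterable[str], balances: Dict[str, int]) -> float:
    """Share of airdrop recipients whose current token balance is non-zero.

    A non-zero balance is used as the proxy for "kept the airdrop";
    addresses missing from `balances` are treated as zero.
    """
    recipient_list = list(recipients)
    if not recipient_list:
        return 0.0
    kept = sum(1 for addr in recipient_list if balances.get(addr, 0) > 0)
    return kept / len(recipient_list)

# Toy example: 2 of 4 recipients still hold tokens.
print(retention(["0x1", "0x2", "0x3", "0x4"], {"0x1": 5, "0x2": 0, "0x4": 12}))
```

Running the same function against balances taken at several points in time, rather than once, gives a retention curve instead of a single number.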

The classic mistake most airdrops make is treating this as a one-time equation.

To demonstrate this, I thought some data might help. Luckily, Optimism has actually executed multi-round airdrops! I was hoping to find some easy Dune dashboards with the retention numbers I was after, but I had no such luck. So I decided to roll up my sleeves and get the data myself.

Without overcomplicating it, I wanted to understand one simple thing: how does the percentage of users with a non-zero OP balance change over successive airdrops?

I used the address lists at https://github.com/ethereum-optimism/op-analytics/tree/main/reference_data/address_lists to get every address that had participated in the Optimism airdrops. Then I built a little scraper to fetch the OP balance of each and every address in the list (burning some of our internal RPC credits along the way) and did a bit of data wrangling.
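The scraper itself isn't published, so here is a minimal standard-library sketch of one way to fetch OP balances: an `eth_call` JSON-RPC request to the ERC-20 `balanceOf(address)` function (selector `0x70a08231`) on the OP token at `0x4200000000000000000000000000000000000042` on OP Mainnet. `RPC_URL` is a placeholder for whatever endpoint you use, and batching, retries and rate limiting are deliberately left out.

```python
import json
import urllib.request

RPC_URL = "https://YOUR-OP-MAINNET-RPC"  # placeholder: substitute your own endpoint
OP_TOKEN = "0x4200000000000000000000000000000000000042"  # OP token on OP Mainnet
BALANCE_OF_SELECTOR = "0x70a08231"  # first 4 bytes of keccak256("balanceOf(address)")

def balance_of_calldata(address: str) -> str:
    """ABI-encode balanceOf(address): selector + address left-padded to 32 bytes."""
    addr = address.lower().removeprefix("0x")
    if len(addr) != 40:
        raise ValueError(f"not a 20-byte address: {address}")
    return BALANCE_OF_SELECTOR + addr.rjust(64, "0")

def decode_uint256(result_hex: str) -> int:
    """Decode the hex-encoded uint256 returned by eth_call."""
    return int(result_hex, 16)

def op_balance(address: str, rpc_url: str = RPC_URL) -> int:
    """Return the OP balance of `address` in base units (OP has 18 decimals)."""
    payload = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "eth_call",
        "params": [{"to": OP_TOKEN, "data": balance_of_calldata(address)}, "latest"],
    }
    request = urllib.request.Request(
        rpc_url,
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        body = json.load(response)
    if "error" in body:
        raise RuntimeError(body["error"])
    return decode_uint256(body["result"])
```

Looping `op_balance` over each address list and counting zero results is enough to reproduce the kind of zero-balance percentages discussed below, though at the scale of hundreds of thousands of addresses you would want JSON-RPC batching or a multicall contract.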

Before we dive in, one caveat: each OP airdrop is independent of the prior one. There's no bonus or link for retaining tokens from the previous airdrop. I know the reason why, but anyway, let's carry on.

Airdrop #1

Given to 248,699 recipients with the criteria available here: https://community.optimism.io/docs/governance/airdrop-1/#background. The TLDR is that users were given tokens for the following actions:

OP Mainnet users (92k addresses)

Repeat OP Mainnet users (19k addresses)

DAO Voters (84k addresses)

Multisig Signers (19.5k addresses)

Gitcoin Donors on L1 (24k addresses)

Users Priced Out of Ethereum (74k addresses)

After analysing all these users and their OP balances, I got the following distribution. Zero balances indicate users who dumped, since unclaimed OP tokens were sent directly to eligible addresses at the end of the airdrop (as per https://dune.com/optimismfnd/optimism-airdrop-1).

This first airdrop is surprisingly good relative to previous airdrops I've observed! Most have a 90%+ dump rate, so for only 40% of addresses to have a zero balance is surprisingly good.

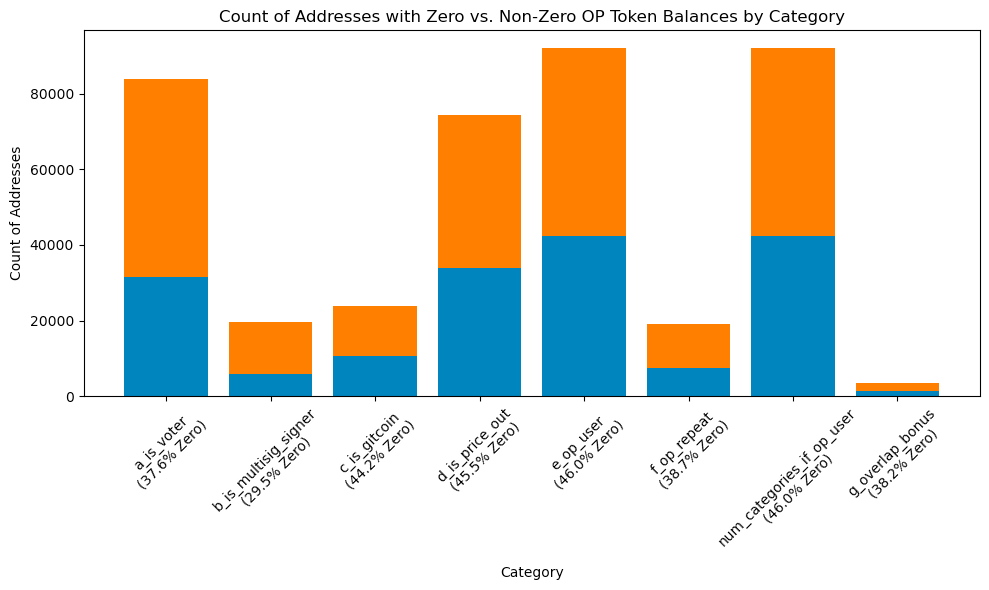

I then wanted to understand how each criterion affected whether users were likely to retain tokens. The only issue with this methodology is that addresses can be in multiple categories, which skews the data. I wouldn't take this at face value, but rather as a rough indicator:

One-time OP users had the highest percentage of users with a 0 balance, followed by users who were priced out of Ethereum. Obviously these weren't the best segments to distribute tokens to. Multisig signers had the lowest, which I think is a great indicator, since it's not obvious to airdrop farmers to set up a multisig and sign transactions just to farm an airdrop!
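The overlap caveat is easy to see in a small sketch. Using made-up toy inputs (not real Optimism data), it computes the zero-balance rate per criterion; an address that qualified under several criteria is counted in every one of them, so the per-criterion rates are not independent and don't add up to the overall rate.

```python
from collections import defaultdict
from typing import Dict, Set

def zero_balance_rate_by_category(
    categories: Dict[str, Set[str]],  # criterion name -> qualifying addresses
    balances: Dict[str, int],         # address -> current balance (missing = 0)
) -> Dict[str, float]:
    rates: Dict[str, float] = {}
    for name, addresses in categories.items():
        zero = sum(1 for a in addresses if balances.get(a, 0) == 0)
        rates[name] = zero / len(addresses) if addresses else 0.0
    return rates

def overlap_counts(categories: Dict[str, Set[str]]) -> Dict[str, int]:
    """How many criteria each address appears under; values above 1 cause the skew."""
    counts: Dict[str, int] = defaultdict(int)
    for addresses in categories.values():
        for a in addresses:
            counts[a] += 1
    return dict(counts)

# Toy inputs: "0xb" qualifies under both criteria and is counted in both rates.
categories = {
    "multisig_signers": {"0xa", "0xb"},
    "dao_voters": {"0xb", "0xc", "0xd"},
}
balances = {"0xa": 3, "0xb": 0, "0xc": 0, "0xd": 7}
print(zero_balance_rate_by_category(categories, balances))
print(overlap_counts(categories))
```

A stricter variant would assign each address to a single primary criterion before computing rates, at the cost of having to pick a priority order.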

Airdrop #2

This airdrop was distributed to 307,000 addresses but was a lot less thoughtful, imo. The criteria were as follows (source: https://community.optimism.io/docs/governance/airdrop-2/#background):

Governance delegation rewards based on the amount of OP delegated and the length of time it was delegated.

Partial gas rebates for active Optimism users who have spent over a certain amount on gas fees.

Multiplier bonuses determined by additional attributes related to governance and usage.

Intuitively, these felt like bad criteria to me because governance voting is easy to bot and fairly predictable. As we'll see below, my intuition wasn't far off. I was surprised at just how low the retention actually was!

Close to 90% of addresses held a 0 OP balance! These are the usual airdrop retention stats people are used to seeing. I would love to dig into this deeper, but I'm keen to move on to the remaining airdrops.

Airdrop #3

This is by far the best-executed airdrop by the OP team. The criteria are more sophisticated than before and include an element of "linearisation" that was mentioned in previous articles. It was distributed to about 31k addresses, so it was smaller but more effective. The details are outlined below (source: https://community.optimism.io/docs/governance/airdrop-3/#airdrop-3-allocations):

OP Delegated x Days = cumulative sum of OP delegated per day (e.g. 20 OP delegated for 100 days: 20 * 100 = 2,000 OP Delegated x Days).

Delegate must have voted onchain in OP Governance during the snapshot period (01-20-2023 at 0:00 UTC to 07-20-2023 at 0:00 UTC).
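Here is a minimal sketch of the OP Delegated x Days metric as described: sum each day's delegated amount over a window and zero the score when the onchain-voting requirement isn't met. The daily-balance input format, the `delegate_voted` flag, the function names, and the assumption that the delegation window equals the snapshot period are all illustrative; this is not Optimism's actual allocation pipeline.

```python
from datetime import date, timedelta
from typing import Dict

def delegated_days(daily_delegated: Dict[date, float], start: date, end: date) -> float:
    """Cumulative sum of OP delegated per day over [start, end)."""
    total = 0.0
    day = start
    while day < end:
        total += daily_delegated.get(day, 0.0)  # days with no record count as 0
        day += timedelta(days=1)
    return total

def airdrop3_score(daily_delegated: Dict[date, float], delegate_voted: bool,
                   start: date, end: date) -> float:
    """Hypothetical scoring: OP Delegated x Days, or 0 if the voting requirement fails."""
    return delegated_days(daily_delegated, start, end) if delegate_voted else 0.0

# The worked example from the criteria: 20 OP delegated for 100 days.
start, end = date(2023, 1, 20), date(2023, 7, 20)  # assumed to match the snapshot period
history = {start + timedelta(days=i): 20.0 for i in range(100)}
print(airdrop3_score(history, delegate_voted=True, start=start, end=end))
```

Because the score grows with both amount and time, briefly parking a large balance right before a snapshot earns far less than steady delegation.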

One critical detail to note here is that the on-chain voting window falls AFTER the period covered by the last airdrop. So the farmers who came in the first round thought "okay, I'm done farming, time to move on to the next thing". This was brilliant, and it helps with this analysis, because look at these retention stats!

Woah! Only 22% of these airdrop recipients have a token balance of 0! To me, this signals that the waste in this airdrop was far lower than in any of the previous ones. It supports my thesis that retention is critical, and adds data showing that multi-round airdrops have more utility than people give them credit for.

Airdrop #4

This airdrop was given to a total of 23k addresses and had more interesting criteria. I personally thought its retention would be high, but after thinking about it I have a thesis for why it turned out lower than expected. The criteria were:

You created engaging NFTs on the Superchain. Total gas on OP Chains (OP Mainnet, Base, Zora) in transactions involving transfers of NFTs created by your address. Measured during the trailing 365 days before the airdrop cutoff (Jan 10, 2023 - Jan 10, 2024).

You created engaging NFTs on Ethereum Mainnet. Total gas on Ethereum L1 in transactions involving transfers of NFTs created by your address. Measured during the trailing 365 days before the airdrop cutoff (Jan 10, 2023 - Jan 10, 2024).

Surely you'd think that people creating NFT contracts would be a good indicator? Unfortunately, the data suggests otherwise.

While it isn't as bad as Airdrop #2, we've taken a pretty big step back in terms of retention relative to Airdrop #3.

My hypothesis is that if they had done additional filtering, excluding NFT contracts marked as spam or requiring some form of "legitimacy", these numbers would have improved significantly. The criteria were too broad. In addition, since tokens were airdropped to these addresses directly (rather than having to be claimed), scam NFT creators ended up thinking "wow, free money, time to dump".
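As a rough illustration of that hypothesis, here is a sketch of filtering NFT contracts before allocating by transfer gas. The record fields (`is_spam`, `holder_count`), the `min_holders` threshold and the function name are hypothetical; real spam labels would have to come from an indexer or your own heuristics.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class NftContract:
    creator: str
    transfer_gas: int   # gas used in transfers of this collection during the window
    is_spam: bool       # hypothetical label from an indexer or in-house heuristic
    holder_count: int   # hypothetical "legitimacy" signal

def eligible_gas_by_creator(contracts: List[NftContract], min_holders: int = 25) -> Dict[str, int]:
    """Sum transfer gas per creator, skipping spam-flagged or thinly held collections."""
    totals: Dict[str, int] = {}
    for c in contracts:
        if c.is_spam or c.holder_count < min_holders:
            continue
        totals[c.creator] = totals.get(c.creator, 0) + c.transfer_gas
    return totals

contracts = [
    NftContract("0xart", transfer_gas=900_000, is_spam=False, holder_count=400),
    NftContract("0xart", transfer_gas=100_000, is_spam=False, holder_count=30),
    NftContract("0xspam", transfer_gas=5_000_000, is_spam=True, holder_count=10_000),
    NftContract("0xtiny", transfer_gas=50_000, is_spam=False, holder_count=3),
]
print(eligible_gas_by_creator(contracts))
```

Note that the spam-flagged collection has by far the most transfer gas, which is exactly why a raw gas-based criterion rewards it most without a filter.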

Closing

As I wrote this article and sourced the data myself, I managed to prove or disprove certain assumptions I had, which turned out to be very valuable. In particular: the quality of your airdrop is directly related to how good your filtering criteria are. People who try to create a universal "airdrop score" or use advanced machine learning models will fail, undone by inaccurate data or lots of false positives. Machine learning is great until you try to understand how it derived the answer it did.

While writing the scripts and code for this article, I also got the numbers for the Starkware airdrop, which is another interesting intellectual exercise. I'll write about that in my next post. The key takeaways teams should learn from here:

Stop doing one-off airdrops! You're shooting yourself in the foot. Deploy incentives kind of like A/B testing: lots of iteration, using past learnings to guide your future aim.

Build criteria on top of past airdrops; you're going to increase your effectiveness. Actually give more tokens to people who hold tokens in the same wallet. Make it clear to your users that they should stick to one wallet and only change wallets if absolutely necessary.

Get better data to ensure smarter and higher quality segmentation. Poor data = poor results. As we saw in the article above, the less "predictable" the criteria, the better the results for retention.
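One way to act on the same-wallet takeaway, sketched under assumptions: scale each address's next-round allocation by how many earlier rounds' tokens it still holds in that wallet. The 0.25 step, the 2.0 cap and the per-round holding history are hypothetical parameters, not something the article or Optimism specifies.

```python
from typing import Dict, List

def loyalty_multiplier(held_prior_rounds: List[bool], step: float = 0.25, cap: float = 2.0) -> float:
    """1.0 base, plus `step` for each earlier round whose tokens this wallet still holds, capped."""
    return min(1.0 + step * sum(held_prior_rounds), cap)

def next_round_allocation(base: Dict[str, float], history: Dict[str, List[bool]]) -> Dict[str, float]:
    """Scale each address's base allocation by its same-wallet holding history."""
    return {addr: amount * loyalty_multiplier(history.get(addr, [])) for addr, amount in base.items()}

base = {"0xkeeper": 100.0, "0xdumper": 100.0, "0xnew": 100.0}
history = {"0xkeeper": [True, True], "0xdumper": [False, False]}
allocation = next_round_allocation(base, history)
print(allocation)
```

Because the bonus only counts tokens still held in the same wallet, moving them to a fresh address forfeits it, which is the incentive to stick to one wallet.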

If you're actively thinking of doing an airdrop or want to jam about this stuff, reach out. I spend all my waking hours thinking about this problem and have been for the past 3 years. The stuff we're building directly relates to all of the above, even if it doesn't seem so on the surface.

Side note: I've been a bit out of the loop with posting due to poor health and lots of work. That means content creation typically ends up sliding off my plate. I'm slowly feeling better and growing the team to ensure I can get back to having a regular cadence here.
